Word Cookies for PC Archives

Researching the language

Today's editors have access to a remarkable amount of information from many different sources. An important part of an editor's job is to know where to look most efficiently for information relevant to the entries they are editing.

These are the main sources of information available to editors:

- the OED's own library, card files, and word databases

- libraries and archives (especially the large copyright libraries)

- external online databases and web sites (some subscriber-only)

- academic consultants

Resources at the OED

The OED department at Oxford University Press has its own specialist library, which editors use when researching the history and definition of a word.

The OED has collected information on the history of words for the last 150 years. The index cards or 'slips' on which this information is collected are stored in the dictionary department in Oxford (and in the nearby archives of the University Press) and are available to editors working on their ranges of words.

In recent years most of the information gathered by the OED through its reading programmes has been collected and stored electronically. The most important collection ('Incoming') is available to editors on their PCs, and they search it regularly for additional details.

This database contains over three million quotations (almost 100 million words) from the main UK and North American Reading Programmes maintained by the OED. The advantage of this database over the traditional method of writing out a quotation on a slip of paper and filing it alphabetically is that any word from a quotation can be matched by a search, not just the 'catchword' that caught the original reader's attention.

This allows the reading programmes to concentrate on highlighting new or unusual words and meanings, since the quotations for these words will also contain a number of common words, which are just as easy to find by electronic searching.

Searches on this database can also be restricted to a particular date range, subject, or location, which can be useful when trying to trace an example of a common word used with a specific meaning.
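The benefit of matching any word in a quotation, with optional restrictions, can be illustrated with a small sketch. This is not the OED's actual system: the `Quotation` record, its fields, the function names, and the sample quotations are all invented for illustration, and only the date and subject restrictions are modelled.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Quotation:
    """Hypothetical record for one collected quotation."""
    catchword: str  # the word that caught the original reader's attention
    text: str
    year: int
    subject: str


def build_index(quotations):
    """Map every word of every quotation to the positions of the quotations containing it."""
    index = defaultdict(set)
    for position, quotation in enumerate(quotations):
        for word in re.findall(r"[a-z']+", quotation.text.lower()):
            index[word].add(position)
    return index


def search(quotations, index, word, start_year=None, end_year=None, subject=None):
    """Return quotations containing `word`, optionally limited by date range and subject."""
    hits = []
    for position in sorted(index.get(word.lower(), ())):
        quotation = quotations[position]
        if start_year is not None and quotation.year < start_year:
            continue
        if end_year is not None and quotation.year > end_year:
            continue
        if subject is not None and quotation.subject != subject:
            continue
        hits.append(quotation)
    return hits


# Invented sample data, not real OED quotations.
QUOTATIONS = [
    Quotation("selfie", "She posted a selfie before the party ended.", 2013, "technology"),
    Quotation("harbour", "A quiet party gathered at the harbour.", 1890, "general"),
    Quotation("dawn", "The search party returned at dawn.", 1925, "general"),
]
INDEX = build_index(QUOTATIONS)

# "party" was never a catchword, yet every quotation containing it is found.
all_hits = search(QUOTATIONS, INDEX, "party")
# Restrict the same search to quotations dated 1900 or later.
modern_hits = search(QUOTATIONS, INDEX, "party", start_year=1900)
```

A slip filed alphabetically under its catchword can only be found by that one word, whereas the index above finds the common word "party" in all three quotations and then narrows the results by year.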

Researching in libraries

OED editors often need to access the resources of a larger library. In such cases the editor sends a message to a researcher working for the OED at the Bodleian Library in Oxford, the British Library in London, the Library of Congress in Washington, or any of several other libraries.

The main tasks of a researcher working in these libraries are as follows:

- to find extra information needed for the definition of a word.

- to search for the earliest evidence of a word in use, so that the editor can determine as closely as possible when a word entered the language. In some cases, such as scientific terminology, it is often possible to determine exactly when a word was coined, by tracing references in the scientific literature.

- to search for recent evidence of a word, so that the editor can determine whether or not a word has dropped out of use.

- to check in an original text a word that has appeared in a later edition, an abstract, etc.

- to ascertain whether a word is registered as a trade mark, so that the dictionary can record this fact in the definition, if necessary.

Over the last few years there has been an explosion in the number of resources available to lexicographers on the Internet. Of particular interest to OED lexicographers are large full-text historical databases such as Early English Books Online (EEBO) and Eighteenth Century Collections Online (ECCO). The British Library has made available significant runs of British regional newspapers up until 1900; PROQUEST makes available a large number of newspaper sources from the United States of America, which can be supplemented at a regional level by the Newspaperarchive.

Most of these sources are available by subscription only. The emergence of Google Books, however, means that a mass of historical and contemporary material can be searched easily and, from the OED's point of view, extremely usefully.

For the editor, the issue is how to search most efficiently through such a mass of data.

Further advice

Once an entry has reached the stage at which it is almost publishable, it can be sent by the OED to any of hundreds of specialist consultants who advise on the final versions of entries from their areas of expertise. So words from astronomy, mineralogy, sports, heraldry, dance, etc. make one final tour before they return to be passed for publication online.

The OED's specialist consultants number over 400. Responding to enquiries from OED editors constitutes only a small part of their life, but they help to ensure the accuracy and completeness of entries that have made their way through the editorial process.

Bibliographical standardization

noun

- a long-term storage device, as a disk or magnetic tape, or a computer directory or folder that contains copies of files for backup or future reference.

- a collection of digital data stored in this way.

- a computer file containing one or more compressed files.

- a collection of information permanently stored on the internet: The magazine has its entire archive online, from 1923 to the present.

verb (used with object), ar·chived, ar·chiv·ing.

British Dictionary definitions for archive

noun (often plural)

verb (tr)

Derived forms of archive

archival, adjective

Internet Archive

The Internet Archive is an American digital library with the stated mission of "universal access to all knowledge."[notes 2][notes 3] It provides free public access to collections of digitized materials, including websites, software applications/games, music, movies/videos, moving images, and millions of books. In addition to its archiving function, the Archive is an activist organization, advocating a free and open Internet. The Internet Archive currently holds over 20 million books and texts, 3 million movies and videos, 400,000 software programs, 7 million audio files, and 463 billion web pages in the Wayback Machine.

The Internet Archive allows the public to upload and download digital material to its data cluster, but the bulk of its data is collected automatically by its web crawlers, which work to preserve as much of the public web as possible. Its web archive, the Wayback Machine, contains hundreds of billions of web captures.[notes 4][4] The Archive also oversees one of the world's largest book digitization projects.

Operations

The Archive is a 501(c)(3) nonprofit operating in the United States. It has an annual budget of $10 million, derived from a variety of sources: revenue from its Web crawling services, various partnerships, grants, donations, and the Kahle-Austin Foundation.[5] The Internet Archive runs periodic funding campaigns, like the one started in December 2019 with a goal of raising $6 million in donations.[6]

Its headquarters are in San Francisco, California. From 1996 to 2009, headquarters were in the Presidio of San Francisco, a former U.S. military base. Since 2009, headquarters have been at 300 Funston Avenue in San Francisco, a former Christian Science Church.

At one time, most of its staff worked in its book-scanning centers; as of 2019, scanning is performed by 100 paid operators worldwide.[7] The Archive has data centers in three Californian cities: San Francisco, Redwood City, and Richmond. To guard against data loss in the event of, for example, a natural disaster, the Archive attempts to create copies of (parts of) the collection at more distant locations, currently including the Bibliotheca Alexandrina[notes 5] in Egypt and a facility in Amsterdam.[8] The Archive is a member of the International Internet Preservation Consortium[9] and was officially designated as a library by the state of California in 2007.[notes 6]

History

Brewster Kahle founded the archive in May 1996, at around the same time that he began the for-profit web crawling company Alexa Internet.[notes 7] In October 1996, the Internet Archive began to archive and preserve the World Wide Web in large quantities,[notes 8] though it had saved its earliest pages in May 1996.[10][11] The archived content was not available to the general public until 2001, when the Archive developed the Wayback Machine.

In late 1999, the Archive expanded its collections beyond the Web archive, beginning with the Prelinger Archives. Now the Internet Archive includes texts, audio, moving images, and software. It hosts a number of other projects: the NASA Images Archive, the contract crawling service Archive-It, and the wiki-editable library catalog and book information site Open Library. Soon after that, the archive began working to provide specialized services relating to the information access needs of the print-disabled; publicly accessible books were made available in a protected Digital Accessible Information System (DAISY) format.[notes 9]

According to its website:[notes 10]

Most societies place importance on preserving artifacts of their culture and heritage. Without such artifacts, civilization has no memory and no mechanism to learn from its successes and failures. Our culture now produces more and more artifacts in digital form. The Archive's mission is to help preserve those artifacts and create an Internet library for researchers, historians, and scholars.

In August 2012, the archive announced[12] that it had added BitTorrent to its file download options for more than 1.3 million existing files and for all newly uploaded files.[13][14] This method is the fastest means of downloading media from the Archive, as files are served from two Archive data centers in addition to other torrent clients which have downloaded and continue to serve the files.[13][notes 11]

On November 6, 2013, the Internet Archive's headquarters in San Francisco's Richmond District caught fire,[15] destroying equipment and damaging some nearby apartments.[16] According to the Archive, it lost a side-building housing one of its 30 scanning centers; cameras, lights, and scanning equipment worth hundreds of thousands of dollars; and "maybe 20 boxes of books and film, some irreplaceable, most already digitized, and some replaceable".[17] The nonprofit Archive sought donations to cover the estimated $600,000 in damage.[18]

In November 2016, Kahle announced that the Internet Archive was building the Internet Archive of Canada, a copy of the archive to be based somewhere in Canada. The announcement received widespread coverage due to the implication that the decision to build a backup archive in a foreign country was because of the upcoming presidency of Donald Trump.[19][20][21] Kahle was quoted as saying:

On November 9th in America, we woke up to a new administration promising radical change. It was a firm reminder that institutions like ours, built for the long-term, need to design for change. For us, it means keeping our cultural materials safe, private and perpetually accessible. It means preparing for a Web that may face greater restrictions. It means serving patrons in a world in which government surveillance is not going away; indeed it looks like it will increase. Throughout history, libraries have fought against terrible violations of privacy—where people have been rounded up simply for what they read. At the Internet Archive, we are fighting to protect our readers' privacy in the digital world.[19]

Since 2018, the Internet Archive visual arts residency, which is organized by Amir Saber Esfahani and Andrew McClintock, has helped connect artists with the archive's more than 48 petabytes[notes 12] of digitized materials. Over the course of the yearlong residency, visual artists create a body of work which culminates in an exhibition. The hope is to connect digital history with the arts and create something for future generations to appreciate online or off.[22] Previous artists in residence include Taravat Talepasand, Whitney Lynn, and Jenny Odell.[23]

In 2019, the main scanning operations were moved to Cebu in the Philippines and were planned to reach a pace of half a million books scanned per year, toward an initial target of 4 million books. The Internet Archive acquires most materials from donations, such as a donation of 250 thousand books from Trent University and hundreds of thousands of 78 rpm discs from Boston Public Library. All material is then digitized and retained in digital storage, while a digital copy is returned to the original holder and the Internet Archive's copy, if not in the public domain, is lent to patrons worldwide one at a time under the controlled digital lending (CDL) theory of the first-sale doctrine.[24] In the same year, its headquarters in San Francisco received a bomb threat which forced a temporary evacuation of the building.[25]

Web archiving

Wayback Machine

The Internet Archive capitalized on the popular use of the term "WABAC Machine" from a segment of The Adventures of Rocky and Bullwinkle cartoon (specifically Peabody's Improbable History), and uses the name "Wayback Machine" for its service that allows archives of the World Wide Web to be searched and accessed.[26] This service allows users to view some of the archived web pages. The Wayback Machine was created as a joint effort between Alexa Internet and the Internet Archive when a three-dimensional index was built to allow for the browsing of archived web content.[notes 13] Millions of web sites and their associated data (images, source code, documents, etc.) are saved in a database. The service can be used to see what previous versions of web sites used to look like, to grab original source code from web sites that may no longer be directly available, or to visit web sites that no longer even exist. Not all web sites are available because many web site owners choose to exclude their sites. As with all sites based on data from web crawlers, the Internet Archive misses large areas of the web for a variety of other reasons. A 2004 paper found international biases in the coverage, but deemed them "not intentional".[27]

A "Save Page Now" archiving feature was made available in October 2013,[28] accessible on the lower right of the Wayback Machine's main page.[notes 14] Once a target URL is entered and saved, the web page becomes part of the Wayback Machine.[28] Through the Internet address web.archive.org,[29] users can upload a wide variety of content to the Wayback Machine, including PDF and compressed file formats. The Wayback Machine creates a permanent local URL for the uploaded content that is accessible on the web, even if it is not listed in searches on the official http://archive.org website.

May 12, 1996, is the date of the oldest archived pages on the archive.org WayBack Machine, such as infoseek.com.[30]
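As a rough illustration of how an archived page can be addressed by date, the sketch below builds a capture address for infoseek.com on the date mentioned above. The URL layout (a `web.archive.org/web/` prefix followed by a 14-digit timestamp and the target address) is an assumption based on how capture addresses commonly appear and is not specified in this text; the function name `wayback_url` is hypothetical, and the code only builds a string without contacting the service.

```python
from datetime import datetime

# Assumed prefix for capture addresses; not confirmed by the text above.
WAYBACK_PREFIX = "https://web.archive.org/web"


def wayback_url(target, when):
    """Build the assumed address of a capture of `target` at the moment `when`."""
    timestamp = when.strftime("%Y%m%d%H%M%S")  # 14 digits: YYYYMMDDhhmmss
    return f"{WAYBACK_PREFIX}/{timestamp}/{target}"


# Address for the oldest archived date mentioned above (midnight is assumed).
oldest = wayback_url("infoseek.com", datetime(1996, 5, 12))
```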

In October 2016, it was announced that the way web pages are counted would be changed, resulting in the decrease of the archived pages counts shown.[31]

Archive-It

Created in early 2006, Archive-It[33] is a web archiving subscription service that allows institutions and individuals to build and preserve collections of digital content and create digital archives. Archive-It allows the user to customize their capture or exclusion of web content they want to preserve for cultural heritage reasons. Through a web application, Archive-It partners can harvest, catalog, manage, browse, search, and view their archived collections.[34]

In terms of accessibility, the archived web sites are full text searchable within seven days of capture.[35] Content collected through Archive-It is captured and stored as a WARC file. A primary and back-up copy is stored at the Internet Archive data centers. A copy of the WARC file can be given to subscribing partner institutions for geo-redundant preservation and storage purposes to their best practice standards.[36] Periodically, the data captured through Archive-It is indexed into the Internet Archive's general archive.

As of March 2014, Archive-It had more than 275 partner institutions in 46 U.S. states and 16 countries that have captured more than 7.4 billion URLs for more than 2,444 public collections. Archive-It partners are universities and college libraries, state archives, federal institutions, museums, law libraries, and cultural organizations, including the Electronic Literature Organization, North Carolina State Archives and Library, Stanford University, Columbia University, American University in Cairo, Georgetown Law Library, and many others.

Book collections

Text collection

The Internet Archive operates 33 scanning centers in five countries, digitizing about 1,000 books a day for a total of more than 2 million books,[37] financially supported by libraries and foundations.[notes 28] As of July 2013, the collection included 4.4 million books with more than 15 million downloads per month.[37] As of November 2008, when there were approximately 1 million texts, the entire collection was greater than 0.5 petabytes, which includes raw camera images, cropped and skewed images, PDFs, and raw OCR data.[38]

Between about 2006 and 2008, Microsoft had a special relationship with Internet Archive texts through its Live Search Books project, contributing more than 300,000 scanned books to the collection, along with financial support and scanning equipment. On May 23, 2008, Microsoft announced it would be ending the Live Book Search project and no longer scanning books.[39] Microsoft made its scanned books available without contractual restriction and donated its scanning equipment to its former partners.[39]

Around October 2007, Archive users began uploading public domain books from Google Book Search.[notes 29] As of November 2013, there were more than 900,000 Google-digitized books in the Archive's collection;[notes 30] the books are identical to the copies found on Google, except without the Google watermarks, and are available for unrestricted use and download.[40] Brewster Kahle revealed in 2013 that this archival effort was coordinated by Aaron Swartz, who with a "bunch of friends" downloaded the public domain books from Google slowly enough and from enough computers to stay within Google's restrictions. They did this to ensure public access to the public domain. The Archive ensured the items were attributed and linked back to Google, which never complained, while libraries "grumbled". According to Kahle, this is an example of Swartz's "genius" for working on what could give the most to the public good for millions of people.[41]

Besides books, the Archive offers free and anonymous public access to more than four million court opinions, legal briefs, or exhibits uploaded from the United States Federal Courts' PACER electronic document system via the RECAP web browser plugin. These documents had been kept behind a federal court paywall. On the Archive, they had been accessed by more than six million people by 2013.[41]

The Archive's BookReader web app,[42] built into its website, has features such as single-page, two-page, and thumbnail modes; fullscreen mode; page zooming of high-resolution images; and flip page animation.[42][43]

Number of texts for each language

Number of all texts (December 9, 2019): 22,197,912[44]

| Language | Number of texts (November 27, 2015) |
|---|---|
| English | 6,553,945[notes 31] |
| French | 358,721[notes 32] |
| German | 344,810[notes 33] |
| Spanish | 134,170[notes 34] |
| Chinese | 84,147[notes 35] |
| Arabic | 66,786[notes 36] |
| Dutch | 30,237[notes 37] |
| Portuguese | 25,938[notes 38] |
| Russian | 22,731[notes 39] |
| Urdu | 14,978[notes 40] |
| Japanese | 14,795[notes 41] |

Open Library

The Open Library is another project of the Internet Archive. The wiki seeks to include a web page for every book ever published: it holds 25 million catalog records of editions. It also seeks to be a web-accessible public library: it contains the full texts of approximately 1,600,000 public domain books (out of the more than five million from the main texts collection), as well as in-print and in-copyright books,[45] which are fully readable, downloadable[46][47] and full-text searchable.[48] After a free registration on the web site, it offers two-week loans of e-books through its Books to Borrow lending program, which covers over 647,784 books not in the public domain, in partnership with over 1,000 library partners from 6 countries.[37][49] Open Library is a free and open-source software project, with its source code freely available on GitHub.

The Open Library faces objections from some authors and the Society of Authors, who hold that the project is distributing books without authorization and is thus in violation of copyright laws,[50] and four major publishers initiated a copyright infringement lawsuit against the Internet Archive in June 2020 to stop the Open Library project.[51]

List of digitizing sponsors for ebooks

As of December 2018, over 50 sponsors helped the Internet Archive provide over 5 million scanned books (text items). Of these, over 2 million were scanned by Internet Archive itself, funded either by itself or by MSN, the University of Toronto or the Internet Archive's founder's Kahle/Austin Foundation.[52]

The collections for scanning centers often also include digitizations sponsored by their partners; for instance, the University of Toronto performed scans supported by other Canadian libraries.

| Sponsor | Main collection | Number of texts sponsored[52] |
|---|---|---|
| | [1] | 1,302,624 |
| Internet Archive | [2] | 917,202 |
| Kahle/Austin Foundation | | 471,376 |
| MSN | [3] | 420,069 |
| University of Toronto | [4] | 176,888 |
| U.S. Department of Agriculture, National Agricultural Library | | 150,984 |
| Wellcome Library | | 127,701 |
| University of Alberta Libraries | [5] | 100,511 |
| China-America Digital Academic Library (CADAL) | [6] | 91,953 |
| Sloan Foundation | [7] | 83,111 |
| The Library of Congress | [8] | 79,132 |
| University of Illinois Urbana-Champaign | [9] | 72,269 |
| Princeton Theological Seminary Library | | 66,442 |
| Boston Library Consortium Member Libraries | | 59,562 |
| Jisc and Wellcome Library | | 55,878 |
| Lyrasis members and Sloan Foundation | [10] | 54,930 |
| Boston Public Library | | 54,067 |
| Nazi War Crimes and Japanese Imperial Government Records Interagency Working Group | | 51,884 |
| Getty Research Institute | [11] | 46,571 |
| Greek Open Technologies Alliance through Google Summer of Code | | 45,371 |
| University of Ottawa | | 44,808 |
| BioStor | | 42,919 |
| Naval Postgraduate School, Dudley Knox Library | | 37,727 |
| University of Victoria Libraries | | 37,650 |
| The Newberry Library | | 37,616 |
| Brigham Young University | | 33,784 |
| Columbia University Libraries | | 31,639 |
| University of North Carolina at Chapel Hill | | 29,298 |
| Institut national de la recherche agronomique | | 26,293 |
| Montana State Library | | 25,372 |
| Allen County Public Library Genealogy Center | [12] | 24,829 |
| Michael Best | | 24,825 |
| Bibliotheca Alexandrina | | 24,555 |
| University of Illinois Urbana-Champaign Alternates | | 22,726 |
| Institute of Botany, Chinese Academy of Sciences | | 21,468 |
| University of Florida, George A. Smathers Libraries | | 20,827 |
| Environmental Data Resources, Inc. | | 20,259 |
| Public.Resource.Org | | 20,185 |
| Smithsonian Libraries | | 19,948 |
| Eric P. Newman Numismatic Education Society | | 18,781 |
| NIST Research Library | | 18,739 |
| Open Knowledge Commons, United States National Library of Medicine | | 18,091 |
| Biodiversity Heritage Library | [13] | 17,979 |
| Ontario Council of University Libraries and Member Libraries | | 17,880 |
| Corporation of the Presiding Bishop, The Church of Jesus Christ of Latter-day Saints | | 16,880 |
| Leo Baeck Institute Archives | | 16,769 |
| North Carolina Digital Heritage Center | [14] | 14,355 |
| California State Library, Califa/LSTA Grant | | 14,149 |
| Duke University Libraries | | 14,122 |
| The Black Vault | | 13,765 |
| Buddhist Digital Resource Center | | 13,460 |
| John Carter Brown Library | | 12,943 |
| MBL/WHOI Library | | 11,538 |
| Harvard University, Museum of Comparative Zoology, Ernst Mayr Library | [15] | 10,196 |
| AFS Intercultural Programs | | 10,114 |

In 2017, the MIT Press authorized the Internet Archive to digitize and lend books from the press's backlist,[53] with financial support from the Arcadia Fund.[54][55] A year later, the Internet Archive received further funding from the Arcadia Fund to invite some other university presses to partner with the Internet Archive to digitize books, a project called "Unlocking University Press Books".[56][57]

Media collections

In addition to web archives, the Internet Archive maintains extensive collections of digital media that are attested by the uploader to be in the public domain in the United States or licensed under a license that allows redistribution, such as Creative Commons licenses. Media are organized into collections by media type (moving images, audio, text, etc.), and into sub-collections by various criteria. Each of the main collections includes a "Community" sub-collection (formerly named "Open Source") where general contributions by the public are stored.

Audio collection

The Audio Archive includes music, audiobooks, news broadcasts, old time radio shows, and a wide variety of other audio files. There are more than 200,000 free digital recordings in the collection. The subcollections include audio books and poetry, podcasts,[58] non-English audio, and many others.[notes 64] The sound collections are curated by B. George, director of the ARChive of Contemporary Music.[59]

The Live Music Archive sub-collection includes more than 170,000 concert recordings from independent musicians, as well as more established artists and musical ensembles with permissive rules about recording their concerts, such as the Grateful Dead, and more recently, The Smashing Pumpkins. Also, Jordan Zevon has allowed the Internet Archive to host a definitive collection of his father Warren Zevon's concert recordings. The Zevon collection ranges from 1976 to 2001 and contains 126 concerts including 1,137 songs.[60]

The Great 78 Project aims to digitize 250,000 78 rpm singles (500,000 songs) from the period between 1880 and 1960, donated by various collectors and institutions. It has been developed in collaboration with the Archive of Contemporary Music and George Blood Audio, responsible for the audio digitization.[59]

Brooklyn Museum

This collection contains approximately 3,000 items from the Brooklyn Museum.[notes 65]

Images collection

This collection contains more than 880,000 items.[notes 66] Cover Art Archive, Metropolitan Museum of Art - Gallery Images, NASA Images, Occupy Wall Street Flickr Archive, and USGS Maps are some sub-collections of the Images collection.

Cover Art Archive

The Cover Art Archive is a joint project between the Internet Archive and MusicBrainz, whose goal is to make cover art images available on the Internet. This collection contains more than 330,000 items.[notes 67]

Metropolitan Museum of Art images

The images of this collection are from the Metropolitan Museum of Art. This collection contains more than 140,000 items.[notes 68]

NASA Images

The NASA Images archive was created through a Space Act Agreement between the Internet Archive and NASA to bring public access to NASA's image, video, and audio collections in a single, searchable resource. The IA NASA Images team worked closely with all of the NASA centers to keep adding to the ever-growing collection.[61] The nasaimages.org site launched in July 2008 and had more than 100,000 items online at the end of its hosting in 2012.

Occupy Wall Street Flickr archive

This collection contains more than 15,000 Creative Commons-licensed photographs from Flickr related to the Occupy Wall Street movement.[notes 69]

USGS Maps

This collection contains more than 59,000 items from the Libre Map Project.[notes 70]

Machinima archive

One of the sub-collections of the Internet Archive's Video Archive is the Machinima Archive. This small section hosts many Machinima videos. Machinima is a digital artform in which computer games, game engines, or software engines are used in a sandbox-like mode to create motion pictures, recreate plays, or even publish presentations or keynotes. The archive collects a range of Machinima films from internet publishers such as Rooster Teeth and Machinima.com as well as independent producers. The sub-collection is a collaborative effort among the Internet Archive, the How They Got Game research project at Stanford University, the Academy of Machinima Arts and Sciences, and Machinima.com.[notes 71]

Mathematics – Hamid Naderi Yeganeh

This collection contains mathematical images created by mathematical artist Hamid Naderi Yeganeh.[notes 72]

Microfilm collection

This collection contains approximately 160,000 items from a variety of libraries including the University of Chicago Libraries, the University of Illinois at Urbana-Champaign, the University of Alberta, Allen County Public Library, and the National Technical Information Service.[notes 73][notes 74]

Moving image collection

The Internet Archive holds a collection of approximately 3,863 feature films.[notes 75] Additionally, the Internet Archive's Moving Image collection includes: newsreels, classic cartoons, pro- and anti-war propaganda, The Video Cellar Collection, Skip Elsheimer's "A.V. Geeks" collection, early television, and ephemeral material from Prelinger Archives, such as advertising

What’s New in the Word Cookies for PC Archives?

Screen Shot

System Requirements for Word Cookies for PC Archives

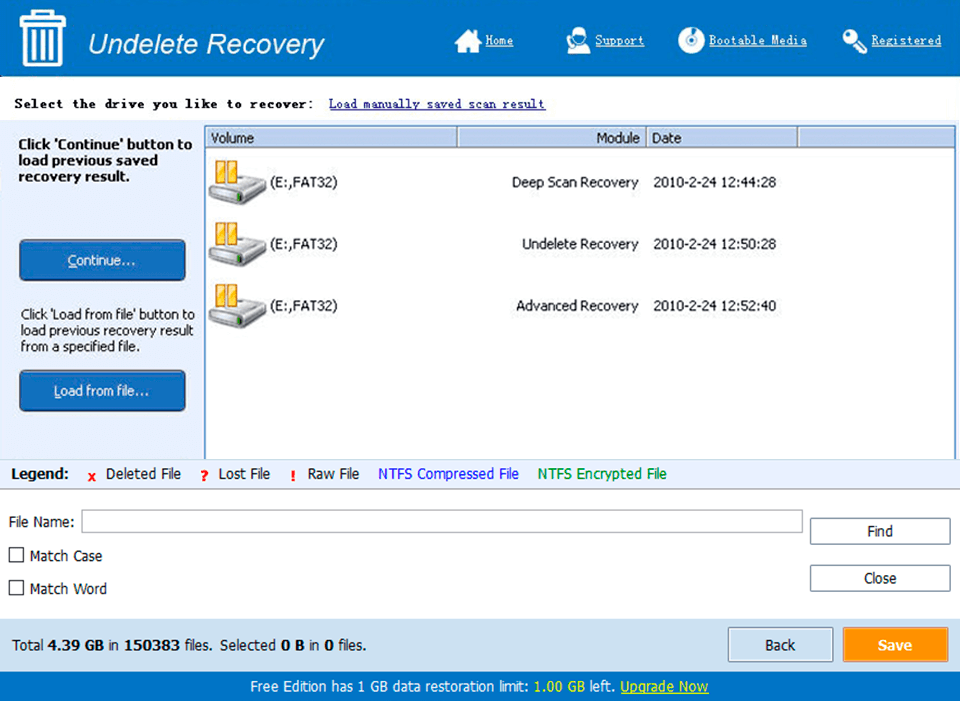

- First, download the Word Cookies for PC Archives

-

You can download its setup from given links: