Deepfake Software For PC Archives

The fight against deepfakes

Last week at the Black Hat cybersecurity conference in Las Vegas, the Democratic National Committee tried to raise awareness of the dangers of AI-doctored videos by displaying a deepfaked video of DNC Chair Tom Perez. Deepfakes are videos that have been manipulated, using deep learning tools, to superimpose a person’s face onto a video of someone else.

As the 2020 presidential election draws near, there’s increasing concern over the potential threats deepfakes pose to the democratic process. In June, the U.S. House Permanent Select Committee on Intelligence held a hearing to discuss the threats of deepfakes and other AI-manipulated media. But there’s doubt over whether tech companies are ready to deal with deepfakes. Earlier this month, Rep. Adam Schiff, chairman of the House Intelligence Committee, expressed concern that Google, Facebook, and Twitter have no clear plan to deal with the problem.

Mounting fear over the potential onslaught of deepfakes has spurred a slate of projects and efforts to detect deepfakes and other image- and video-tampering techniques.

Inconsistent blinking

Deepfakes use neural networks to overlay the face of the target person on an actor in the source video. While neural networks can do a good job at mapping the features of one person’s face onto another, they don’t have any understanding of the physical and natural characteristics of human faces.

That’s why they can give themselves away by generating unnatural phenomena. One of the most notable artifacts is unblinking eyes. Before the neural networks that generate deepfakes can do their trick, their creators must train them by showing them examples. In the case of deepfakes, those examples are images of the target person. Since most pictures used in the training show open eyes, the neural networks tend to create deepfakes that don’t blink, or that blink in unnatural ways.

Last year, researchers from the University at Albany published a paper on a technique for spotting this type of inconsistency in eye blinking. Interestingly, the technique uses deep learning, the same technology used to create the fake videos. The researchers found that neural networks trained on eye blinking videos could localize eye blinking segments in videos and examine the sequence of frames for unnatural movements.
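To make the idea of checking blink patterns concrete, here is a minimal sketch. It is not the researchers' neural network: it swaps in a simple threshold heuristic over a hypothetical per-frame "eye openness" signal (for example, one produced by a face landmark detector, which is assumed and not shown). The function names, the `closed_below` threshold, and the `min_rate` cutoff are all illustrative assumptions.

```python
from typing import List


def count_blinks(openness: List[float], closed_below: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive 'closed eye' frames.

    `openness` is a hypothetical per-frame eye-openness score; values below
    `closed_below` are treated as closed. Both thresholds are assumptions.
    """
    blinks = 0
    run = 0  # length of the current run of closed-eye frames
    for value in openness:
        if value < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip may end in the middle of a blink
        blinks += 1
    return blinks


def blinks_per_minute(openness: List[float], fps: float) -> float:
    """Convert the blink count into a rate, given the video frame rate."""
    if not openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(openness) / fps / 60
    return count_blinks(openness) / minutes


def looks_suspicious(openness: List[float], fps: float,
                     min_rate: float = 5.0) -> bool:
    """Flag clips whose blink rate falls below an assumed minimum."""
    return blinks_per_minute(openness, fps) < min_rate


# A 20-second clip at 30 fps in which the eyes close for 3 frames every 2 seconds.
natural = ([0.3] * 57 + [0.05] * 3) * 10
# A clip of the same length in which the eyes never close.
unblinking = [0.3] * 600

print(looks_suspicious(natural, fps=30))
print(looks_suspicious(unblinking, fps=30))
```

A fixed rate threshold like this is easy to fool and will misfire on short clips, which is one reason a learned model that examines the full frame sequence is the more plausible approach in practice.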

However, with the technology becoming more advanced every day, it’s just a matter of time until someone manages to create deepfakes that can blink naturally.

Tracking head movement

More recently, researchers at UC Berkeley developed an AI algorithm that detects face-swapped videos based on something that is much more difficult to fake: head and face gestures. Every person has unique head movements (e.g. nodding when stating a fact) and face gestures (e.g. smirking when making a point). Deepfakes inherit head and face gestures from the actor, not the targeted person.

A neural network trained on the head and face gestures of an individual would be able to flag videos that contain head gestures that don’t belong to that person. To test their model, the UC Berkeley researchers trained the neural network on real videos of world leaders. The AI was able to detect deepfaked videos of the same persons with 92% accuracy.

Head movement detection provides a robust protection method against deepfakes. However, unlike the eye-blinking detector, where you train your AI model once, the head movement detector needs to be trained separately for every individual. So while it’s suitable for public figures such as world leaders and celebrities, it’s less ideal for general-purpose deepfake detection.
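The per-person nature of this approach can be illustrated with a small sketch. It is a statistical stand-in, not the researchers' model: each video is assumed to have already been reduced to a short feature vector describing gesture habits (the feature extraction, the feature meanings, and the threshold of 3.0 are all hypothetical). A profile is built from real videos of one person, and a new video is flagged when it sits far from that profile.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

Profile = List[Tuple[float, float]]  # (mean, standard deviation) per feature


def build_profile(real_videos: Sequence[Sequence[float]]) -> Profile:
    """Summarize one person's real videos as a per-feature mean and spread."""
    columns = zip(*real_videos)
    return [(mean(col), pstdev(col)) for col in columns]


def anomaly_score(profile: Profile, features: Sequence[float]) -> float:
    """Average absolute z-score of a video's features against the profile."""
    total = 0.0
    for (mu, sigma), x in zip(profile, features):
        # Guard against zero spread when every training value was identical.
        total += abs(x - mu) / (sigma if sigma > 0 else 1e-6)
    return total / len(profile)


def is_flagged(profile: Profile, features: Sequence[float],
               threshold: float = 3.0) -> bool:
    """Flag a video whose gestures deviate strongly from the person's norm."""
    return anomaly_score(profile, features) > threshold


# Hypothetical gesture features from four real videos of one public figure.
real_videos = [[0.80, 0.10], [0.82, 0.12], [0.78, 0.08], [0.81, 0.11]]
profiles = {"leader_a": build_profile(real_videos)}  # one profile per person

print(is_flagged(profiles["leader_a"], [0.80, 0.10]))  # gestures match the profile
print(is_flagged(profiles["leader_a"], [0.20, 0.60]))  # gestures of a different actor
```

The `profiles` dictionary makes the trade-off visible: every individual to be protected needs their own entry built from their own footage, which is why the method fits a limited set of public figures better than the general public.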

Pixel inconsistencies

When forgers tamper with an image or video, they do their best to make it look realistic. While image manipulation can be extremely hard to spot with the naked eye, it leaves behind some artifacts that a well-trained deep learning algorithm can detect.

Researchers at the University of California, Riverside, developed an AI model that detects tampering by examining the edges of the objects contained in images. The pixels at the boundaries of objects that are artificially inserted into or removed from an image contain special characteristics, such as unnatural smoothing and feathering.

The UCR researchers trained their model on a large dataset containing annotated examples of untampered and tampered images. The neural network was able to glean common patterns that define the difference between the boundaries of manipulated and non-manipulated objects in images. When presented with new images, the AI was able to detect and highlight manipulated objects.

While the researchers tested this method on still images, it can potentially work on videos too. Deepfakes are essentially a series of manipulated image frames, so the same object manipulation artifacts exist in those individual frames, on the edges of the subject’s face.

Again, while this is an effective method to detect a host of different tampering techniques, it can become obsolete as deepfakes and other video-manipulation tools become more advanced.

Setting a baseline for the truth

While most efforts in the field focus on finding proof of tampering in videos, a different solution to fight deepfakes is to prove what’s true. This is the approach researchers at the UK’s University of Surrey used in Archangel, a project they are trialing with national archives in several countries.

Archangel combines neural networks and blockchain to establish a smart archive for storing videos so that they can be used in the future as a single source of truth. When a record is added to the archive, Archangel trains a neural network on various formats of the video. The neural network will then be able to tell whether a new video is the same as the original video or a tampered version.

Traditional fingerprinting methods verify the authenticity of files by comparing them at the byte level. This is not suitable for videos, whose byte structure changes when they are compressed in different formats. Neural networks, by contrast, learn and compare the visual features of the video, so the comparison is codec-agnostic.
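The difference between byte-level and content-level fingerprints can be sketched in a few lines. This is not Archangel's method: a classical "average hash" stands in for the neural network, a tiny grayscale grid stands in for a video frame, and the uniform brightness shift is only a rough, assumed imitation of what lossy re-encoding does to pixel values.

```python
import hashlib
from typing import List, Tuple

Frame = List[List[int]]  # grayscale pixel values in the range 0..255


def byte_fingerprint(frame: Frame) -> str:
    """Byte-level fingerprint: changes if any single pixel value changes."""
    flat = bytes(v for row in frame for v in row)
    return hashlib.sha256(flat).hexdigest()


def average_hash(frame: Frame) -> Tuple[int, ...]:
    """Content-level fingerprint: one bit per pixel, set if above the mean."""
    flat = [v for row in frame for v in row]
    avg = sum(flat) / len(flat)
    return tuple(1 if v > avg else 0 for v in flat)


def hamming(a: Tuple[int, ...], b: Tuple[int, ...]) -> int:
    """Number of positions at which two bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))


original = [[10, 200, 10, 200],
            [200, 10, 200, 10],
            [10, 200, 10, 200],
            [200, 10, 200, 10]]

# Simulated re-encoding: every pixel shifts slightly, the picture looks the same.
reencoded = [[min(255, v + 3) for v in row] for row in original]

# Simulated tampering: two pixels in the top-left corner are replaced.
tampered = [row[:] for row in original]
tampered[0][0], tampered[0][1] = 250, 5

print(byte_fingerprint(original) == byte_fingerprint(reencoded))   # bytes differ
print(hamming(average_hash(original), average_hash(reencoded)))    # content distance
print(hamming(average_hash(original), average_hash(tampered)))     # content distance
```

The byte hash treats the harmless re-encoding and the tampering alike, since both change the bytes. The content-level fingerprint separates them, which is the property the paragraph above attributes to the learned fingerprints.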

To make sure these neural network fingerprints themselves are not compromised, Archangel stores them on a permissioned blockchain maintained by the national archives participating in the trial program. Adding records to the archive requires consensus among the participating organizations. This ensures that no single party can unilaterally decide which videos are authentic. Once Archangel launches publicly, anyone will be able to run a video against the neural networks to check its authenticity.
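As a rough illustration of how a permissioned ledger can keep any single party from adding or altering records, consider the sketch below. It is not Archangel's implementation. The class name, the member names, and the majority quorum rule are assumptions made for the example (the article says only that consensus is required), and real approvals would be cryptographically signed rather than passed in as plain names.

```python
import hashlib
import json
from typing import Iterable, List, Optional


class ArchiveLedger:
    """Append-only, hash-chained log that only accepts quorum-approved records."""

    GENESIS = "0" * 64  # placeholder 'previous hash' for the first block

    def __init__(self, members: Iterable[str], quorum: Optional[int] = None):
        self.members = set(members)
        # Assumed rule: a simple majority unless a quorum is given explicitly.
        self.quorum = quorum if quorum is not None else len(self.members) // 2 + 1
        self.blocks: List[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def add_record(self, video_id: str, fingerprint: str,
                   approvals: Iterable[str]) -> bool:
        """Append a fingerprint if enough distinct members approved it."""
        valid = self.members & set(approvals)  # ignore outsiders and duplicates
        if len(valid) < self.quorum:
            return False
        prev = self.blocks[-1]["hash"] if self.blocks else self.GENESIS
        body = {"video_id": video_id, "fingerprint": fingerprint, "prev": prev}
        self.blocks.append({**body, "hash": self._digest(body)})
        return True

    def is_intact(self) -> bool:
        """Recompute every hash to detect edits to stored records."""
        prev = self.GENESIS
        for block in self.blocks:
            body = {k: block[k] for k in ("video_id", "fingerprint", "prev")}
            if block["prev"] != prev or block["hash"] != self._digest(body):
                return False
            prev = block["hash"]
        return True


ledger = ArchiveLedger(["archive_a", "archive_b", "archive_c"])
print(ledger.add_record("speech-001", "fp-123", approvals=["archive_a"]))
print(ledger.add_record("speech-001", "fp-123", approvals=["archive_a", "archive_b"]))
print(ledger.is_intact())
```

Because each block stores the hash of the one before it, quietly editing an old fingerprint breaks the chain, and `is_intact()` reports the tampering.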

The downside of this method is that it requires a trained neural network per video. This can limit its use because training neural networks takes hours and requires considerable computing power. It is nonetheless suitable for sensitive videos such as Congressional records and speeches by high-profile figures that are more likely to become the subject of tampering.

A cat-and-mouse game

While it’s comforting to see these and other efforts help protect elections and individuals against deepfakes, they are up against a fast-developing technology. As deepfakes continue to become more sophisticated, it’s unclear whether defense and detection methods will be able to keep up.

Ben Dickson is a software engineer and the founder of TechTalks, a blog that explores the ways technology is solving and creating problems.

This open-source program lets you run deepfakes on live video calls

Since the inception of deepfakes, lawmakers and security analysts have warned the public about how these eerily realistic clips can lead to exploitation, misinformation, and manipulation across the web. But what if people are bored during quarantine and want to play make-believe with their very own faces? A programmer named Ali Aliev has built his own program, Avatarify, on the open-source code of the "First Order Motion Model for Image Animation," Motherboard reports.

The face-swap technology allows the user to impose anyone else's face on their own — with the bonus that it can be done in real-time on any Zoom or Skype video call. For his own part, Aliev has successfully tried being Elon Musk in a call with his buddies.

Endless possibilities — In a YouTube demo uploaded on April 8, Aliev noted that Avatarify has "a neural network [...] that requires a GPU to run smoothly." It isn't working so well for Mac users right now, but Aliev notes that streamlining it to other operating systems is one of his future projects. Those who can run Avatarify alongside Zoom or Skype, he says in his video, can become anyone from Einstein to Eminem, Steve Jobs to Mona Lisa.

Umm cool, but why — It seems to have started out as some innocuous fun. According to Aliev, his plan was to play pranks on his friends. In a comment to Motherboard, Aliev explained:

I ran [the First Order Model] on my PC and was surprised by the result. What’s important, it worked fast enough to drive an avatar real-time. Developing a prototype was a matter of a couple of hours and I decided to make fun of my colleagues with whom I have a Zoom call each Monday. And that worked. As they are all engineers and researchers, the first reaction was curiosity and we soon began testing the prototype.

The programmer says that Avatarify is intended to provide entertainment, and requires "a powerful gaming PC" to work. But the program could go mainstream as it gradually becomes optimized for laptops. Aliev says that it's "just a matter of time."

Deepfakes combined with Zoombombing — Aliev's intentions might be in the right place (a little fun never hurt anyone under a lockdown), but with hackers Zoombombing remote work and school sessions, politicians able to buy ads saying whatever they want on Facebook, and plenty of Americans and Russians willing to use whatever means necessary to ensure Trump gets re-elected, Avatarify could easily be used for nefarious ends. Fortunately, for the time being at least, it can't fake a voice. But that's likely just a matter of time, too.

wbur

Professor Hao Li used to think it would take two to three years for deepfake videos to be perfected to the point where the fakes are indistinguishable from reality.

But now the associate professor of computer science at the University of Southern California says the technology could be perfected in as little as six to 12 months.

Deepfakes are realistic manipulated videos that can, for example, make it look like a person said or did something they didn’t.

“The best possible algorithm will not be able to distinguish,” he says of the difference between a perfect deepfake and real videos.

Li says he's changed his mind because developments in computer graphics and artificial intelligence are accelerating the development of deepfake applications.

A Chinese app called Zao, which lets users convincingly swap their faces with film or TV characters right on their smartphones, impressed Li. When Zao launched on Aug. 30, a Friday, it became the most downloaded app in China’s iOS app store over the weekend, Forbes reports.

“You can generate very, very convincing deepfakes out of a single picture and also blend them inside videos and they have high-resolution results,” he says. “It's highly accessible to anyone.”

Interview Highlights

On the problems with deepfakes

“There are two specific problems. One of them is privacy. And the other one is potential disinformation. But since they are curating the type of videos where you put your face into it, so in that case, disinformation isn't really the biggest concern.”

On the threat of fake news

“You don't really need deepfake videos to spread disinformation. I don't even think that deepfakes are the real threat. In some ways, by raising this awareness by showing the capabilities, deepfakes are helping us to think about if things are real or not.”

On whether deepfakes are harmful

“Maybe we shouldn't really focus on detecting if they are fake or not, but we should maybe try to analyze what are the intentions of the videos.

“First of all, not all deepfakes are harmful. Nonharmful content is obviously for entertainment, for comedy or satire, if it's clear. And I think one thing that would help is … something that is based on AI or something that's data-driven that is capable of discerning if the purpose is not to harm people. That's a mechanism that has to be put in place in domains where the spread of fake news could be the most damaging and I believe that some of those are mostly in social media platforms.”

On the importance of people understanding this technology

“This is the same like when Photoshop was invented. It was never designed to deceive the public. It was designed for creative purposes. And if you have the ability to manipulate videos now, specifically targeting the identity of a person, it's important to create awareness and that's sort of like the first step. The second step would be we have to be able to flag certain content. Flagging the content would be something that social media platforms have to be involved in.

“Government agencies like DARPA, Defense Advanced Research Projects Agency, their purpose is basically to prepare America against potential threats at a technological level. And now in a digital age, one of the things that they're heavily investing into is, how to address concerns around disinformation? In 2015, they started a program called MediFor for media forensics and the idea is that while now we have all the tools that allow us to manipulate images, videos, multimedia, what can we do to detect those? And at the same time, AI advanced so much specifically in the area of deep learning where people can generate photorealistic content. Now they are taking this to another level and starting a new program called SemaFor, which is semantic forensics.”

On why the idea behind a deepfake is valuable

“Deepfake is a very scary word but I would say that the underlying technology is actually important. It has a lot of positive-use cases, especially in the area of communication. If we ever wanted to have immersive communication, we need to have the ability to generate photo-realistic appearances of ourselves in order to enable that. For example, in the fashion space, that's something that we're working on. Imagine if you ever wanted to create a digital twin of yourself and you wanted to see yourself in different clothing and do online shopping, really virtualizing the entire experience. And deepfake specifically are focusing on video manipulations, which is not necessarily the primary goal that we have in mind.”

On whether he feels an obligation to play a role in combating the use of deepfakes for spreading disinformation

“First of all, we develop these technologies for creating, for example, avatars right, which is one thing that we're demonstrating with our startup company called Pinscreen. And the deepfakes are sort of like a derivative. We were all caught by surprise.

“Now you have these capabilities and we really have an additional responsibility in terms of what are the applications. And this goes beyond our field. This is an overall concern in the area of artificial intelligence.”

Ciku Theuri produced and edited this interview for broadcast with Kathleen McKenna. Allison Hagan adapted it for the web.

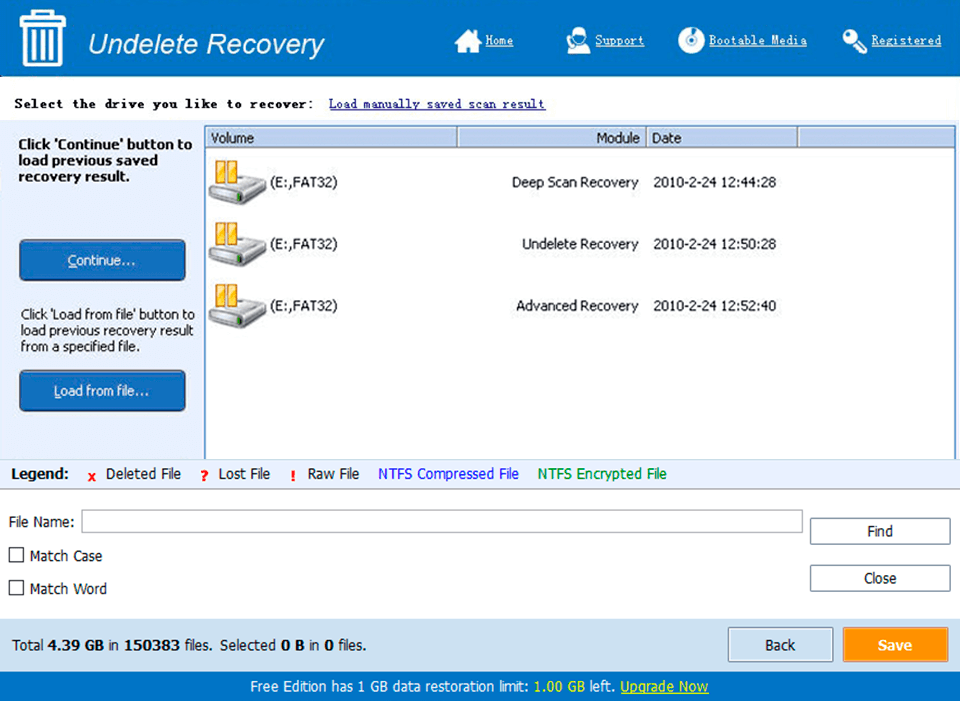

What’s New in the Deepfake Software For PC Archives?

Screen Shot

System Requirements for Deepfake Software For PC Archives

- First, download the Deepfake Software For PC Archives

-

You can download its setup from given links: